To help you get up and running with deep learning and inference on ASUS Tinker Edge T, this article will show you how to perform real-time pose detection.

PoseNet is a vision model that can be used to determine the pose of a person in an image or video by estimating where key body joints are. Please note that this algorithm does not recognize or identify people who appear in an image or video.
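To make "estimating where key body joints are" more concrete, the following is a minimal sketch of how one detected pose could be represented and filtered in Python. PoseNet estimates 17 keypoints per person. The `Keypoint` class, the `confident_keypoints` helper and the 0.5 threshold are illustrative assumptions, not part of the PoseNet project itself.

```python
from dataclasses import dataclass
from typing import Dict, List

# The 17 body joints that PoseNet estimates for each person.
KEYPOINT_NAMES = [
    "nose", "left eye", "right eye", "left ear", "right ear",
    "left shoulder", "right shoulder", "left elbow", "right elbow",
    "left wrist", "right wrist", "left hip", "right hip",
    "left knee", "right knee", "left ankle", "right ankle",
]


@dataclass
class Keypoint:
    """Hypothetical container for one estimated joint."""
    name: str
    x: float      # horizontal position in image pixels
    y: float      # vertical position in image pixels
    score: float  # model confidence between 0 and 1


def confident_keypoints(keypoints: List[Keypoint],
                        min_score: float = 0.5) -> Dict[str, Keypoint]:
    """Keep only the joints whose confidence reaches min_score."""
    return {kp.name: kp for kp in keypoints if kp.score >= min_score}


if __name__ == "__main__":
    # Made-up values, for illustration only.
    pose = [
        Keypoint("nose", 320.0, 110.0, 0.98),
        Keypoint("left wrist", 210.0, 300.0, 0.31),
    ]
    for name, kp in confident_keypoints(pose).items():
        print(f"{name}: ({kp.x:.0f}, {kp.y:.0f}) score={kp.score:.2f}")
```

Note that the data holds only joint positions and confidence scores, which is why the model cannot tell who a person is.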

Posture recognition has been widely applied in fields such as physical training, environmental awareness, human-computer interaction, surveillance systems, and health care for the elderly.

Falls are the leading cause of both fatal and nonfatal injuries for people aged 65+. Falls can result in hip fractures, broken bones and head injuries. Pose detection technology has the potential to help hospitals and healthcare staff know when elderly patients in their care want to get out of bed or a chair, so they can provide assistance in time. This technology could be particularly helpful in hospitals and healthcare centers that face staff shortages.

Google PoseNet is built into the Tinker Edge T image. The steps below show how to use it.

To start, connect a USB camera, like the one shown below, to Tinker Edge T.

Power on Tinker Edge T and launch the terminal by clicking the icon marked with the red box in the image below.

In the terminal, type the following commands to go to the project directory and run a simple camera example that streams the camera image through PoseNet and draws the pose as an overlay on the image.

$ cd /usr/share/project-posenet

$ python3 pose_camera.py --videosrc=/dev/video2

The argument --videosrc specifies the source of the camera image. In this example, /dev/video2 is the USB camera we just connected. For more information about the available arguments, append -h to the command as shown below.
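The device number assigned to a USB camera can vary with what else is attached, so /dev/video2 may not be correct on every setup. On Linux, video devices conventionally appear as /dev/videoN. The following is a minimal sketch that lists them so you can choose a value for --videosrc. The helper name `list_video_devices` is hypothetical and is not part of project-posenet.

```python
import glob
import os
import re
from typing import List


def list_video_devices(dev_dir: str = "/dev") -> List[str]:
    """Return the videoN nodes found in dev_dir, sorted by N."""
    found = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:  # skip names such as "videox" that carry no index
            found.append((int(match.group(1)), path))
    return [path for _, path in sorted(found)]


if __name__ == "__main__":
    devices = list_video_devices()
    if not devices:
        print("No video devices found; check the camera connection.")
    for device in devices:
        print(device)
```

If several nodes are listed, try each one with --videosrc until the camera image appears.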

$ python3 pose_camera.py -h

usage: pose_camera.py [-h] [--mirror] [--model MODEL]
                      [--res {480x360,640x480,1280x720}] [--videosrc VIDEOSRC]
                      [--h264]

optional arguments:
  -h, --help            show this help message and exit
  --mirror              flip video horizontally (default: False)
  --model MODEL         .tflite model path. (default: None)
  --res {480x360,640x480,1280x720}
                        Resolution (default: 640x480)
  --videosrc VIDEOSRC   Which video source to use (default: /dev/video0)
  --h264                Use video/x-h264 input (default: False)
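The help text above is what Python's standard argparse module typically produces. As a rough illustration of how these options and their defaults fit together, here is a sketch of a parser inferred only from that help output. It is an assumption about the structure, not the actual source of pose_camera.py, and `build_parser` is a hypothetical name.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the options listed in the help output."""
    parser = argparse.ArgumentParser(
        prog="pose_camera.py",
        # Appends "(default: ...)" to each option's help text.
        formatter_class=argparse.ArgumentDefaultsHelpFormatter,
    )
    parser.add_argument("--mirror", action="store_true",
                        help="flip video horizontally")
    parser.add_argument("--model", help=".tflite model path.")
    parser.add_argument("--res", default="640x480",
                        choices=["480x360", "640x480", "1280x720"],
                        help="Resolution")
    parser.add_argument("--videosrc", default="/dev/video0",
                        help="Which video source to use")
    parser.add_argument("--h264", action="store_true",
                        help="Use video/x-h264 input")
    return parser


if __name__ == "__main__":
    # Same arguments as the example command in this article.
    args = build_parser().parse_args(["--videosrc=/dev/video2"])
    print(args.videosrc, args.res, args.mirror)
```

Options that are not given on the command line keep their defaults, so the example command in this article runs at 640x480 without mirroring.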

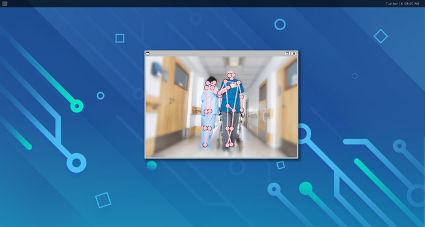

After successfully running the example project, you should see an image similar to the ones shown below.

For additional information, please refer to https://github.com/google-coral/project-posenet.